Introduction

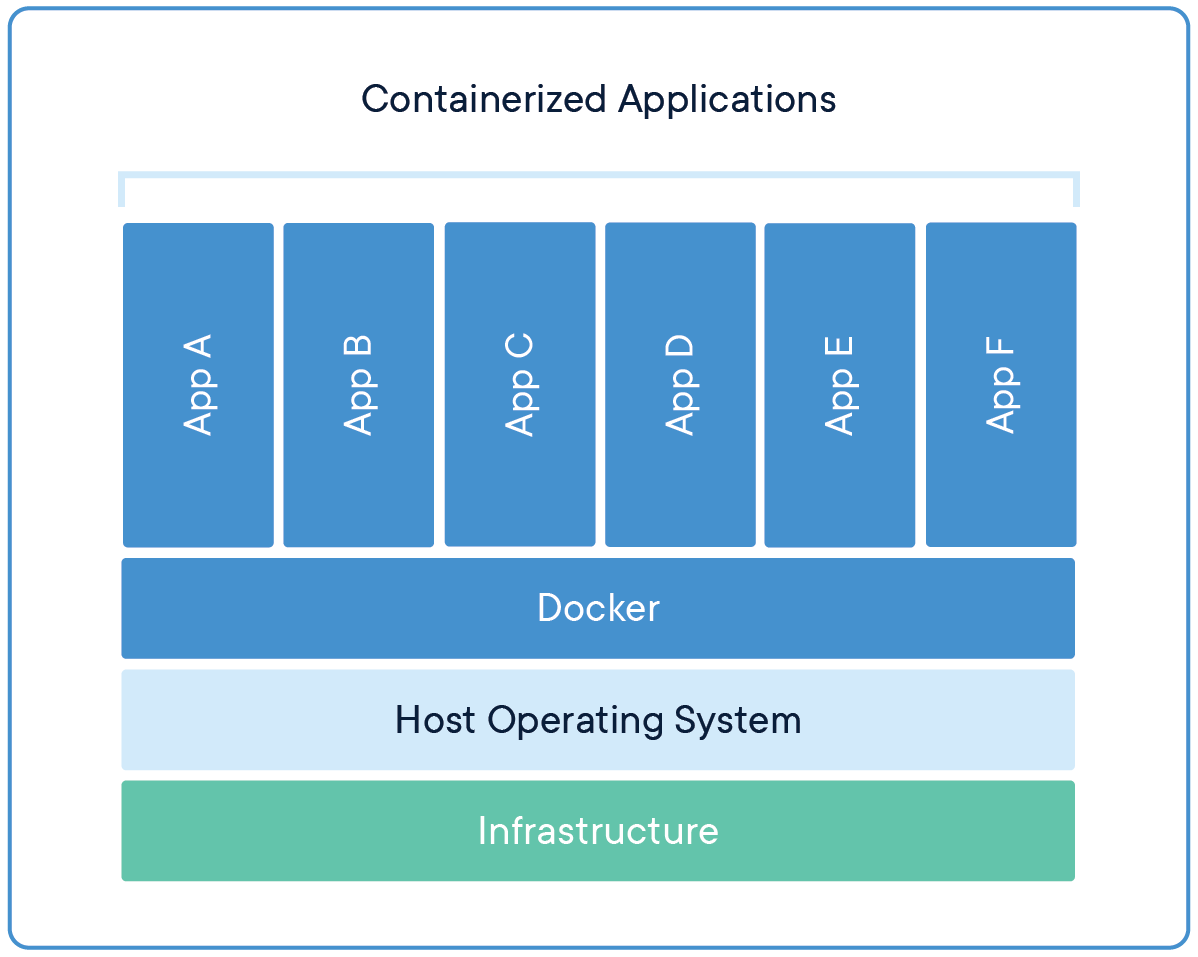

Docker is a platform for building, shipping, and running distributed applications. It enables developers to create lightweight, portable containers for their applications containing all dependencies needed, making them easy to manage, test, and deploy across multiple environments. In this article, we will explore the basics of Docker, including its architecture and components, and provide a practical tutorial for using Docker to build and deploy applications. By the end of this article, you will have a solid understanding of Docker and how to use it to accelerate your application development and deployment.

Deployment Problem Evolution

Deployment technologies have evolved significantly over the years, from manual server setup to virtual machines and then to containers. Virtual machines improved deployment efficiency, but each one carries a full guest operating system, which makes them resource-intensive. This is where Docker containers come in. A container packages an application together with all the dependencies it needs while sharing the host's kernel, so it stays lightweight and portable and is largely independent of how the host is configured. Developers can therefore package and deploy their applications in a consistent and reproducible way, with far fewer compatibility issues and much less environment-specific configuration. As a result, Docker containers have become one of the most widely used ways to deploy applications in modern software development.

Containerizing Your App with Docker

To containerize an application using Docker, a developer typically follows these steps:

Write a Dockerfile: A Dockerfile is a script that specifies the application, its dependencies, and how it should be built and run inside a container.

Build the Docker image: Using the Dockerfile, the developer can build a Docker image that contains the application and its dependencies. This image can be stored and distributed as a single, portable unit.

Run the Docker container: Once the image is built, the developer can run a Docker container from the image, which will run the application in an isolated environment. The container can be started, stopped, and managed using Docker commands.

Test and deploy the containerized application: The developer can now test the containerized application in a local or remote environment, and deploy it to production when ready.

Docker Objects

With that overview of the containerization process in mind, let's look more closely at Docker objects. The main ones are:

Images

An image is a lightweight, read-only template that contains the application code, libraries, and dependencies needed to run an application. Images are created from a Dockerfile, which specifies the application environment and how to build the image. Docker images can be stored and distributed as a single, portable unit, making it easy to share and deploy applications across different environments.

Containers

A container is a runtime instance of an image that provides an isolated environment for an application to run. Containers are created from Docker images and can be started, stopped, and managed using Docker commands. By default, each container is isolated from the host system and from other containers: it has its own filesystem, process tree, and network interfaces, although it shares the host's kernel. This isolation helps applications run consistently across different environments.

Volumes

Data written inside a container lives in that container's writable layer, so it is lost when the container is removed and is hard to share with other containers. Volumes solve this issue: they are a persistent storage mechanism, managed by Docker, that containers use to store and share data. Volumes can hold files, configuration data, and other application data that needs to outlive any single container.
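To make this concrete, here is a minimal Node.js sketch of an application that keeps a counter in a file. The DATA_DIR variable, the /data mount point, the visits.txt file name, and the recordVisit function are all hypothetical names chosen for illustration; the point is only that state written under a volume-backed directory is not tied to one container.

```javascript
const fs = require('fs');
const path = require('path');

// Hypothetical setup: DATA_DIR is an assumed variable name, and /data is an
// assumed mount point for a volume inside the container.
function recordVisit(dataDir = process.env.DATA_DIR || '/data') {
  const file = path.join(dataDir, 'visits.txt');
  // Read the previous count, treating a missing or unreadable value as zero.
  const previous = fs.existsSync(file)
    ? parseInt(fs.readFileSync(file, 'utf8'), 10) || 0
    : 0;
  const next = previous + 1;
  // Persist the new count so a later process can pick it up.
  fs.writeFileSync(file, String(next));
  return next;
}
```

If the container were started with something like docker run -v my-data:/data followed by the image name (my-data being an assumed volume name), the count would be stored in that volume rather than in the container's writable layer, so a new container using the same volume would continue from the previous value.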

Networks

Docker networks are virtual networks that connect containers to each other, as well as to other services and resources on a network. Containers attached to the same network can communicate with each other, and networks can also be used to isolate containers from each other or from the host system.

Underlying Architecture

Client

The Docker client is a command-line tool used to interact with the Docker daemon. It can be used to build, run, and manage containers, as well as to manage Docker images, networks, and volumes. When you run a command such as docker build . in your terminal, you are using the Docker client, which passes the request on to the daemon.

Daemon

The Docker daemon is a background process that runs on the host machine and manages Docker containers. The Docker daemon is responsible for starting and stopping containers, managing container resources, and providing access to Docker images and other resources.

Registry

The Docker registry is a service that stores and distributes Docker images. The most popular registry is Docker Hub, which is a public registry that hosts thousands of images that can be used to run applications in containers. Private registries can also be created to store and distribute custom Docker images within an organization.
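Images in a registry are referred to by a name and an optional tag, as in node:14 in the tutorial below; when no tag is given, Docker uses latest. The following is a simplified sketch of how such a reference splits into its parts. The parseImageRef function is hypothetical, it ignores digest references (name@sha256:...), and it does not validate its input.

```javascript
// Split an image reference into its name and tag (simplified sketch).
function parseImageRef(ref) {
  const lastSlash = ref.lastIndexOf('/');
  const lastColon = ref.lastIndexOf(':');
  // A colon only separates a tag when it comes after the last slash;
  // otherwise it belongs to a registry port such as localhost:5000/app.
  if (lastColon > lastSlash) {
    return { name: ref.slice(0, lastColon), tag: ref.slice(lastColon + 1) };
  }
  // No explicit tag: Docker falls back to "latest".
  return { name: ref, tag: 'latest' };
}
```

For example, parseImageRef('node:14') yields the name node with the tag 14, while a reference that includes a private registry host and port, such as localhost:5000/app, keeps the port as part of the name.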

Practical Tutorial (on Ubuntu)

Now that we have a good overview of Docker, let's get our hands dirty by building a Docker image and running a container. Follow along without worrying too much about the individual commands; they are explained in more detail in the next section.

First, let's create a simple Node.js server in a file called server.js:

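    const http = require('http');

    // Listen on all interfaces so the server can be reached through a
    // published container port, not only from inside the container.
    const hostname = '0.0.0.0';
    const port = 3000;

    // Answer every request with a plain-text greeting.
    const server = http.createServer((req, res) => {
      res.statusCode = 200;
      res.setHeader('Content-Type', 'text/plain');
      res.end('Hello World\n');
    });

    server.listen(port, hostname, () => {
      console.log(`Server running at http://${hostname}:${port}/`);
    });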

Test the server locally first by running node server.js, then stop it before moving on so that port 3000 is free again.

Now, let's containerize the application with its dependencies. First, create a file called Dockerfile in the same directory as server.js and add the following:

    # Use an official Node.js runtime as a parent image
    FROM node:14

    # Set the working directory to /app
    WORKDIR /app

    # Copy the current directory contents into the container at /app
    COPY . /app

    # Install any packages listed in package.json
    # (server.js above has no dependencies, so there is nothing to install here)
    RUN npm install

    # Document that the container listens on port 3000
    # (the port is actually published with -p when the container is run)
    EXPOSE 3000

    # Define an environment variable
    ENV NAME=World

    # Run server.js when the container launches
    CMD ["node", "server.js"]

Save the Dockerfile and build the image using this command:

    docker build -t my-node-server .

After the image is built, run a container using this command:

    docker run -p 3000:3000 my-node-server

Now, if you visit localhost:3000 you should see your server responding.

Congratulations! You have just built your first Docker app.

Commands

Dockerfile Commands

The following list contains the most common Dockerfile instructions and their uses:

FROM: Specifies the base image for the Dockerfile, that is, the existing image on which the new image is built.

WORKDIR: Sets the working directory for any subsequent instructions in the Dockerfile. If the directory does not already exist in the image, it is created.

CMD: Specifies the default command to run when the container starts. It is typically used to start the application inside the container. If multiple CMD instructions exist in the Dockerfile, only the last one takes effect.

RUN: Executes a command during the build process. It is used to install dependencies or perform other actions needed to prepare the image for running the application.

ARG: Defines a variable that can be passed to the Dockerfile at build time, for example with --build-arg when building the image.

ENV: Sets an environment variable in the container. It is used to pass environment variables to the application running inside the container.

COPY: Copies files from the host machine into the image. It is used to copy the application code and other files during the build process.

ADD: Similar to COPY, but can also download files from a URL and extract files from archives. However, it is recommended to use COPY instead of ADD whenever possible.
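The Dockerfile in the tutorial sets NAME with ENV, but server.js never reads it. As a minimal sketch of how an application could pick up such a variable, the hypothetical greeting function below builds the response text from the environment. The function name and the fallback value are assumptions for illustration, not part of the original tutorial.

```javascript
// Build the response body from the environment, falling back to "World"
// when NAME is unset (for example when running outside the container).
function greeting(env = process.env) {
  const name = env.NAME || 'World';
  return `Hello ${name}\n`;
}
```

In server.js, the handler could then call res.end(greeting()) instead of sending a fixed string. A value set with ENV is only a default: it can be overridden when the container is started, for example with docker run -e NAME=Docker -p 3000:3000 my-node-server.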

Docker Client Commands

A brief explanation of some common Docker client commands and their flags:

docker build .: Builds a Docker image from a Dockerfile. Flags include -t to specify the name and optional tag for the image, --no-cache to disable caching during the build process, and --build-arg to pass build-time arguments to the Dockerfile.

docker run image: Runs a Docker container from an image. Flags include -d to run the container in detached mode, -p to publish a container port on a host port (written as host:container), -it to run the container interactively with a terminal, and -v to mount a host directory or a named volume inside the container.

docker ps: Lists running Docker containers. Flags include -a to list all containers, including stopped ones, -q to list only the container IDs, and -f to filter the output based on various criteria (e.g. container name, image name, etc.).

docker stop <container_id>: Stops a running Docker container. Flags include -t to specify how many seconds to wait before the container is forcibly killed. To stop a container immediately, use docker kill <container_id> instead.

docker images: Lists available Docker images. Flags include -a to list all images, including intermediate ones, -q to list only the image IDs, and --filter to filter the output based on various criteria (e.g. image name, label, etc.).

docker rmi <image_id>: Removes a Docker image. Flags include -f to force the removal of an image.

docker exec <container_id> command: Executes a command inside a running Docker container. Flags include -it to run the command interactively with a terminal.

docker logs <container_id>: Shows the logs of a Docker container. Flags include -f to follow the logs in real time.

docker inspect <container_id>: Shows detailed information about a Docker container. Flags include --format to output the information in a specific format.

... and many more commands and flags!
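The timeout on docker stop matters because of how stopping works: Docker first sends the container's main process a SIGTERM signal and only kills it once the timeout has passed. A Node.js process started directly as the container's main process typically does not exit on SIGTERM unless it installs a handler, so the container may appear to hang until the timeout expires. Below is a minimal sketch of such a handler; installGracefulShutdown is a hypothetical helper name, and the proc parameter exists only so the function can be exercised without a real process.

```javascript
// Register handlers so the process exits promptly when asked to stop.
// `server` is any object with a close(callback) method, such as an http.Server.
function installGracefulShutdown(server, proc = process) {
  const shutdown = (signal) => {
    console.log(`Received ${signal}, closing server`);
    // Stop accepting new connections, then exit once close() has completed.
    server.close(() => proc.exit(0));
  };
  proc.on('SIGTERM', () => shutdown('SIGTERM')); // sent by docker stop
  proc.on('SIGINT', () => shutdown('SIGINT'));   // sent by Ctrl+C in a terminal
}
```

In the tutorial's server.js, this could be wired up by calling installGracefulShutdown(server) after server.listen(...). Open keep-alive connections can delay close(), so a production setup may need extra handling beyond this sketch.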

Conclusion

In conclusion, Docker has changed the way applications are built, shipped, and run. With Docker, developers can package an application and all of its dependencies into a lightweight, portable container that is easy to manage, test, and deploy across multiple environments, which is why containers have become one of the most widely used ways to deploy applications in modern software development. In this article, we explored the basics of Docker, including its architecture and components, and worked through a practical tutorial for building an image and running a container. Docker is a powerful tool that is well worth learning for any developer who wants to speed up application development and deployment.